KNN is rarely to use in practice, but it allows us to understand classify problem.

Nearest Neighbor Classifier:

Nearest Neighbor Algorithm has two ways to classify, L1 Distance and L2 Distance.

L1 Distance is Manhattan Distance.

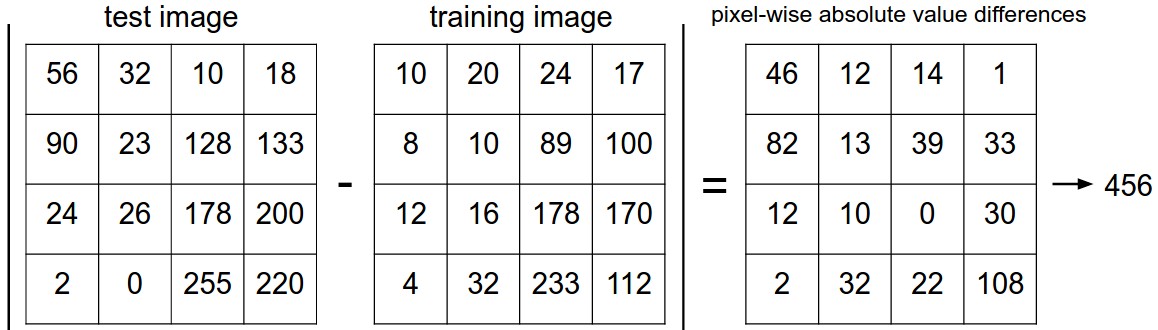

This formula represents distance between test image and training images.

I1 is test image, I2 is training image. test set has p images. Thus, distance of test image to all image is the sum of right side. The computing process like below:

L2 Distance uses square root to obtain distance between test image and training images. L2 Distance is Euclidean Distance

Here is the code to build a Nearest Neighbor.1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30import numpy as np

class Nearest_Neighbor(object):

def __init__(self):

pass

def train(self, X, y):

self.Xtr = X

self.ytr = y

def predict(self, X):

""" X is N x D where each row is an example we wish to predict label for """

num_test = X.shape[0]

# lets make sure that the output type matches the input type

Ypred = np.zeros(num_test, dtype=self.ytr.dtype)

# loop over all test rows

for i in range(num_test):

# find the nearest training image to the i'th test image

# L1 distance

l1_distances = np.sum(np.abs(self.Xtr - X[i, :]), axis=1) # acc: 38.6%

# L2 distance

l2_distances = np.sqrt(np.sum(np.square(self.Xtr - X[i, :]), axis=1)) # acc: 35.4%

min_index = np.argmin(l1_distances) # get the index with smallest distance

print("min_index:", min_index)

Ypred[i] = self.ytr[min_index] # predict the label of the nearest example

print(Ypred[i])

return Ypred

K-Nearest Neighbor Classifier:

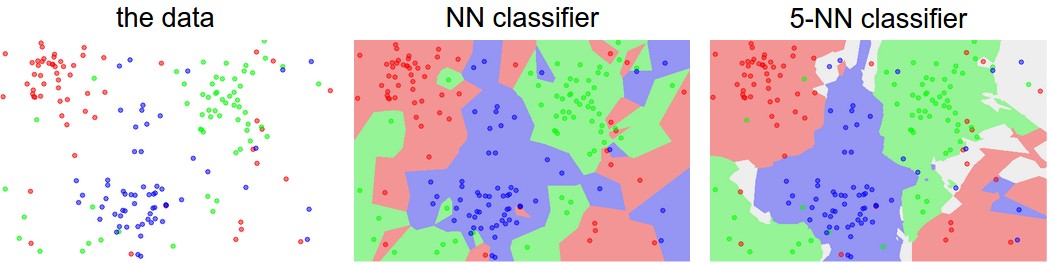

KNN is easy to understand. When K=1 the classifier shows above. When K = 5, classifier gets the top 5 closest images. Intuitively, higher value of K has a smooth effect that makes classifier resistant the outliers.

Pros and Cons of Nearest Neighbor Classifier:

KNN:

Pros:

Simple to implement and understand.

Cons:

1.Pay much computational cost at test time, but takes no time to train.

2.Remember all training set and store it for future use.

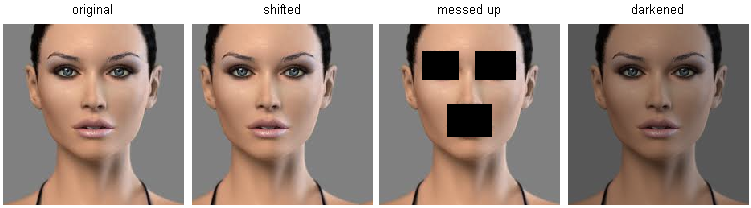

3.Images that only has difference in light intensity are very different from pixel-based L2 computation.

Deep neural networks are very expensive to train, but it is very cheap to classify a new test example one the training is finished. This mode of operation is much more desirable in practice.